Can AI See the World the Way We Do?

Article 19 probes the future of AI-driven alt text for images and more

AI has the potential to bring people who are blind or who have low vision closer and closer to the visual world. Joined by Vice-Chair of Be My Eyes, Bryan Bashin, and Tamman document accessibility specialist, Liza Grant, we explore the differences between alt text written by a trained human and alt text written by AI, and what the future of AI looks like for blind and low-vision individuals.

Meet our guests:

- Bryan Bashin has a long career working in technology and community outreach, and he is passionate about using innovation to solve problems faced by marginalized groups.

- Liza Grant is a Document Accessibility Specialist at Tamman, a team lead, and a strong accessibility advocate.

Images featured in the podcast

First Image

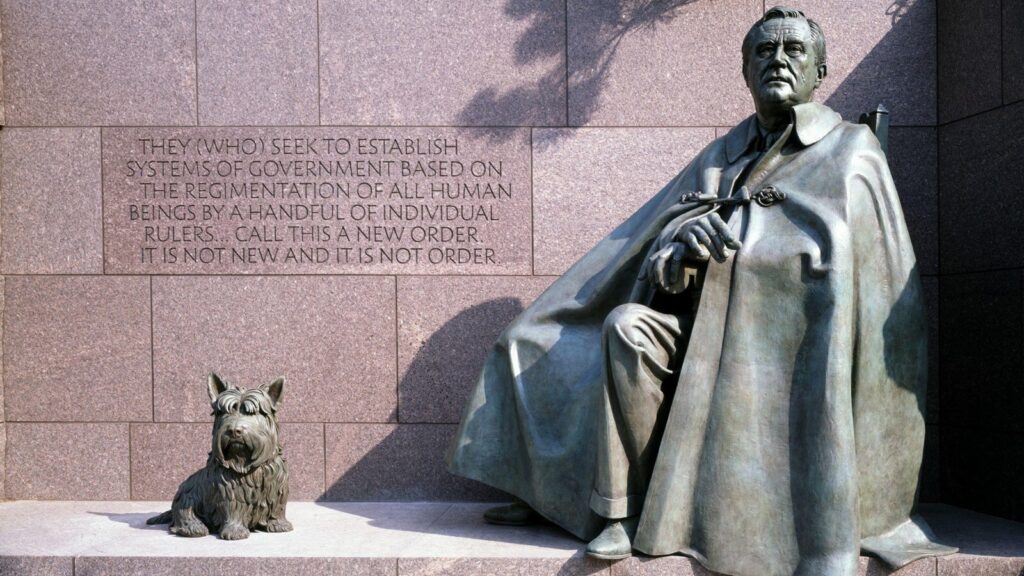

Second Image

Listen to more Article 19 Podcast Episodes

Full Transcript

Access the PDF Transcript

Narrator Kristen:

Hello everyone, and welcome to Article 19! I’m Kristen Witucki, content creator and accessibility specialist at Tamman, and I’m also the host for our conversation today. AI is everywhere. I just attended a conference where, without going back to check, I can comfortably say that at least 80% of the sessions were about AI. And that is a conservative estimate. AI has officially exploded, and now everyone wants to have a guess about whether and how it’s going to be the next big thing. But what if the AI is really accessible and inclusive? AI has the potential to bring people who are blind or who have low vision closer and closer to the visual world. For the first time in my 43 years of life, I am able to describe an image I have not actually seen, because AI gives me a template that I can thoughtfully edit and then double-check. But there is also a real danger that AI will reinforce all of the biases that have kept people with disabilities un- or underemployed and profoundly misunderstood.

AI voice:

The design of the statue is meant to honor his leadership while acknowledging his disability in a dignified manner.

Narrator Kristen:

How can we harness the power of AI to bring about true accessibility and inclusion? Article 19 is a call for others to join us in a bigger conversation around the ADA, digital accessibility, and access to information. At Tamman, we’re working to build the inclusive web every day. But to do that, we need all of us working together and learning together. Thank you so much for going with us on this journey!

Produced Introduction with music bed:

Expression is one of the most powerful tools we have. A voice, a pen, a keyboard. The real change which must give to people throughout the world their human rights must come about in the hearts of people. We must want our fellow human beings to have rights and freedoms which give them dignity. Article 19 is the voice in the room.

Narrator Kristen:

Without question, AI has been the “talk of the town” inside and outside the tech world. As a blind woman, I assumed it had very little to do with me, and in one sense, which we’ll get to later, I was right. For years Facebook has used rudimentary descriptions that say “may be an image of something.” Some people. A tree. A sunset, when the person was writing about dawn. Then Be My Eyes alerted the world about its AI. So of course, I was curious, and I cynically thought, “Here we go again.” But I was wrong.

Be My Eyes AI:

Be My AI. The image shows a gold pendant necklace. The pendant is circular with a texture and features various small

Narrator Kristen:

That sentence alone is progress. Startling progress. Be My AI has revolutionized the world for people who are blind or who have low vision. Suddenly we can find out everything from what that silly meme actually says, to prescription information (not recommended but often used anyway), to a description of a loved one. By the way, I haven’t tried describing my children yet with Be My AI. First, I’m worried I might cry. And secondly, I’m worried that the description will either be devoid of emotion or will have insincere emotion. Again, we’ll get to that later. I asked Bryan Bashin, the vice-chair of Be My Eyes, what people love about AI.

Kristen Witucki:

How would you bring the potential that AI has in adding to the conversation?

Bryan Bashin:

Enormous and unprecedented. I mean, I joined Be My Eyes in part, to get AI on our system. We didn’t know how much people would use it compared to human volunteers. When you have, like, I say, seven and a half million volunteers answering a zillion questions every day, why would anybody use AI? Well, it turned out that people really, really started using AI about this time last year. And the rate of AI queries is now about 2.2 million queries a month. That’s almost a query every second around the clock. And some of us said, Well, this is going to cannibalize the volunteer stuff. Volunteer usage didn’t go down. It went up, but the AI usage really exploded. And the last statistics I saw says that for every volunteer call we receive, we receive seven queries for AI. So it dwarfs the volunteer side, which is already enormous. Why are people using AI instead of volunteers? Well, we hear from our users a number of things. First of all, like maybe don’t want to bother a human. There is a micro bit of emotion in like, engaging with any random stranger. Oh, I have to explain. I can’t see this. Help me with this. Maybe I don’t want to do that for the umpteenth time every day. Maybe I just want to talk to a robot. We’ve also heard, well, maybe I don’t want to invite a human stranger into my kitchen to look at something because it’s messier than I would like. Or maybe there’s somebody else in the room or something that doesn’t want to be part of that conversation. So for all kinds of reasons, people would rather, often, just get the objective output of AI, even though it might take several queries to get it right, but that’s what we’re finding.

Narrator Kristen:

As a Be My Eyes executive and a frequent user of both the human volunteers and the technology, Bryan communicates this ebullient feeling that both humans and AI are fundamentally necessary to good description.

Bryan Bashin:

As a blind guy myself, I use it all the time. And just, you know, we live in a world that we want to live in actively. And that means, you know, working with inaccessible screens, devices, printed stuff, all the ways in which blind people were never considered. So I love not only the utility of the human volunteers, but also the sort of magic of you never know who you’re going to get. You’re genuinely meeting somebody who is a neat, kind person who wants to help, and suddenly you pop up on their screen, and you have this little project together, and at Be My Eyes we call these random acts of kindness. Hey, could you help me understand this? Hey, when does this milk expire? Can I change the setting on my irrigation timer? All these things. And we’ll talk mostly about AI later on. But the human brain is such a great machine for analogies and can interpolate when the AI can’t yet do that well. So I love that. There’s a little magic that happens every time. You know, what city are you in? You know, if I’m needing this help, say late at night California time, I’m going to be talking more than likely to an Australian who’s in the time zone in the middle of their day. And so there’s also that connectivity too.

Narrator Kristen:

I asked our document accessibility specialist team lead, Liza Grant, to talk more about the nuts and bolts of writing a good description.

Kristen Witucki:

Can you tell us a little bit about what your life is like writing alt text and maybe some of the things that help you to write it?

Liza Grant:

Sure. I write a fair amount of alt text in my day-to-day. I would say the most important thing for you to use when you’re writing it, is a little bit of your own common sense. The main thing that will help you when you’re trying to figure out what to write is looking at the context of your image. What are you saying around it? What is the purpose? It really helps to have context when you’re trying to figure out, what are the most meaningful, most important parts of the image that you want to make sure that you reference when you’re writing your alt text. So there’s a little bit of reading comprehension that goes on. A little bit of usually, if you’re the author yourself, you have a little bit of an easier time figuring out the alt text, because you know what you want your message to be, and you know what the important thing is that this image adds to the rest of your content. So yeah, there are some guides and things online, but a lot of times you’re just going to google anything specific in the image that seems relevant and just think about the rest of your piece and what this image adds.

Kristen Witucki:

Definitely needs to be more than just the code that names the image or just like picture of a cat or something, but shouldn’t be a book either.

Liza Grant:

Right? A lot of times, depending on the image, maybe you’re looking at that image for one second. Maybe it’s a little graphic on a PDF that you’re reading, and it’s not very important. Or maybe it’s on someone’s Instagram, and then you want to spend a lot more time looking at it, because the picture is the main part of the content. You’re going to write longer alt text, you might have to include an image description on there, just because the user’s meant to spend more time on that piece of content. So that goes into it as well. What is your platform? It’s also an important thing to think about.

Narrator Kristen:

I met with our social media project manager, and she and I talked about general categories that we thought might be great for different kinds of descriptions and different ways to engage with the AI. We came up with a couple of general categories, a product and a monument. And once we came up with those categories, Sydney, who’s not on the air, picked out the photos and sent them to Markus, the producer who is here with us, and he sent them to Liza pretty close to the meeting, so she had a little bit of time to write alt text, but not a ton of time. And I haven’t opened these photos yet. I promise I have not. So I’m pretty excited to see what everyone came up with. I did load Liza’s descriptions so I can read them aloud in Braille, so we won’t have to listen to the screen reader all the time. So I’ll read the human-created descriptions, and then on the AI side, I will have my phone using VoiceOver to describe the photo with Be My Eyes. Quick disclaimer, AI is updating literally every minute, possibly every second. And so, if you found these photos and ran them through AI yourself, the descriptions might not be the same as the ones I’m getting. So, you know, I don’t know if that means anything, but this is just kind of a fun discussion, and we’ll talk about what the human got out of the photo and what the AI got out of the photo, and just compare them.

Kristen Witucki:

So here’s Liza’s description: A circular golden pendant on a golden chain. The 12 astrological sign symbols make a smaller circle inside the pendant, and each associated birthstone sits above them. Huh? All right, what do you think of this? Would you buy this pendant, Liza?

Liza Grant:

You know, I might buy this pendant, lean into a little spiritual side of myself? Yeah, some fashion happening. I’ve got a lot of silver jewelry. Got a lot of silver pendants with all 12 astrological signs on them, but no gold. So this actually sounds like it would fit right in with my collection.

Kristen Witucki:

So I’m just thinking about the wording. The description is beautiful, by the way. And okay, so the signs make a smaller circle inside the pendant, and each associated birthstone sits above them. Okay, so does that mean above them? That, like, the stones are along the border of the circle?

Liza Grant:

Great question, because I actually was struggling with this a little bit when I wrote it. So you can imagine it. We have the circular pendant. There’s a smaller circle, kind of inside that, of the birthstones, and then a smaller circle inside that has the different astrological signs.

Kristen Witucki:

Yeah. Okay. Well, I can see, I mean, I think that’s really cool. Like to hear about how you decided on that order of operations, and you kind of went from the inside out. And I think that works really well. And I also think going from the outside in would probably work too. Like I think there’s more than one way to do this.

Liza Grant:

Definitely, and I kind of I guess thinking about it, a lot of people will sometimes do an approach like that, where it’s you’re starting from one end of something and kind of going to the other, outside in, inside out. And I think I was kind of thinking about going from the most broad thing, maybe to the most particular thing. But then it’s like, yeah. So there it is, overall. But let me tell you about some of the things that make this unique, where you’ll decide whether you want it or not based off of these smaller things.

Kristen Witucki:

And I’m also thinking that if you think about the stones versus the signs, the signs are probably a little more important than the stones. Like, the reason someone would buy this necklace would most likely be they like astrology first, rather than like the birthstones being the most important thing, so I could see why you do the signs?

Liza Grant:

I had not even thought of that, but that makes a lot of sense. Where the signs, for a lot of people, have more meaning, there’s a lot more meaning associated with those, and even like aesthetic choices, than just a birthstone, which pretty much everyone has one. It’s not like you’re into it or not as much. It’s less of a statement. So I hadn’t even thought about that, but that’s very correct.

Narrator Kristen:

Liza and I can go on forever with this type of analysis and frequently do, mostly on Slack. But we wanted to hear what the AI had to say.

Kristen Witucki:

Alright everybody, now that we have the human description of this pendant, let’s see if AI is similar or different, or, you know, let’s compare them.

Be My Eyes AI:

Back button. Product JPEG. Actions menu. Done. Button.

Kristen Witucki:

Just so you know, I have the voiceover set at about 50% which is, I thought, a pretty good rate for people who are maybe a little less familiar with listening to things on a screen reader to be able to understand it. I have my general daily rate at about 70, and I know blind people who have it much faster than that. So it’s all kind of on a continuum. But I thought this was sort of a good mid-point for hearing it, but not hearing it too slowly. So I’m going to go to share.

Be My Eyes AI:

Image profile. Share. Button. Share

Kristen Witucki:

But, I’m not actually sharing it. I’m going to go to describe with Be My Eyes.

Be My Eyes AI:

Be My AI. The image shows a gold pendant necklace. The pendant is circular with a texture and features various small, colorful gemstones arranged in a circular pattern around the center. Each gemstone is set at the end of the line radiating from the center, resembling a clock face. The center of the pendant has a small clear gemstone. Around the gemstones, there are small symbols or characters engraved into the gold surface. The pendant hangs from a delicate gold chain.

Kristen Witucki:

Huh. That is a very fascinating description.

Liza Grant:

It covered a lot of the items.

Kristen Witucki:

It did. It did. It really focused on the gemstones the most. It did not realize what the symbols were in that first take.

Liza Grant:

It did not. There are lines radiating out from the center. So it did see that,

Kristen Witucki:

I did like that. It saw that there was a center gem. Is there a center gemstone?

Liza Grant:

Yeah, there is a center gemstone. I didn’t even mention that because I don’t know what that is, but yes, there is a little clear gemstone in the middle.

Kristen Witucki:

Okay. Well, the cool part about AI is I can ask it a question. I think I’m going to ask it a question on this one. So let’s see.

Be My Eyes AI:

Insertion helps dictate

Kristen Witucki:

What symbols are on the pendant? Question Mark.

Be My Eyes AI:

Inserted, what symbols are on the pendant?

Kristen Witucki:

Okay.

Be My Eyes AI:

Message, clear tag, send message. Button, please, wait. Be My AI is writing a message. [Space: AI working in real time.] Be My AI. The symbols on the pendant appear to be astrological zodiac signs. Each sign is positioned near a corresponding gemstone around the circle. Here is a list of the symbols typically found on such pendants. Aries, Taurus, Gemini, Cancer, Leo, Virgo, Libra, Scorpio, Sagittarius, Capricorn, Aquarius, Pisces. Each symbol is associated with a specific gemstone on the pendant.

Kristen Witucki:

Wow. Okay,

Liza Grant:

Got that pretty on the nose.

Kristen Witucki:

Got that after a follow-up. But if I hadn’t asked, it would have totally missed the Zodiac.

Liza Grant:

Which, we argued, was one of the more important things about it. So that would explain it.

Kristen Witucki:

Yeah. Another thing that’s interesting is that it says, Here is a list of these, the symbols that are typically found on these types of pendants, which to me, is a little bit, throws me a little bit off, because I’m like, if it’s typically found, does that mean this pendant has them, or does it mean that it could have them? Maybe it does not have them.

Liza Grant:

Right? Or it has one but not the others. I’m an Aries. I don’t know if you can tell by my stubborn demeanor on this podcast, but yeah, if it had Libra and Virgo, that’s cool, but maybe that’s not the necklace that I would want. So then you might even have to get into asking it more questions of like, “which symbols are on this pendant?” And maybe it would be able to tell you. I will say, I feel like with engraving, that can be especially difficult because you don’t really have color contrast to go off of. It’s gold with gold writing on it. But it was still able to figure it out, at least to a certain extent. So.

Kristen Witucki:

I did ask what symbols are on it, so since it said they were typically on it, it didn’t quite answer the question,

Liza Grant:

It hedged a little bit.

Kristen Witucki:

It did, yeah. It was worried.

Liza Grant:

Yeah. I’m sure AI feels bad when it gets things wrong, just like we do. So that makes sense to me from a human perspective,

Kristen Witucki:

Maybe I should ask it another question, just for fun.

Be My Eyes AI:

Set. Dictate.

Kristen Witucki:

What is the center gemstone? question mark

Be My Eyes AI:

Inserted. What is the center gemstone message? Clear text sent message. So please wait. Be my AI. Is writing a message. Be my AI. The center gemstone of the pendant is a small clear gemstone. Likely a diamond or a clear crystal.

Kristen Witucki:

Again, we don’t know.

Liza Grant:

A little bit of a hedge too, but I will say I didn’t even mention the crystal in the middle. I’m looking closer at the pendant now, and I think it has little designs around the center. Should make it kind of seem like it’s a sun, or at least some kind of star. So there was a little meaning that the human missed, because I was a little focused on the other stuff around it. But I would probably myself, be a little bit nebulous on what type of crystal that is, just because it’s not a birthstone that I know of. So we kind of have to give it our best guess, just like the AI did.

Kristen Witucki:

I also love that you noticed the sun, even though you didn’t notice it in your initial description. The whole idea of the sun being in the middle, and AI totally missed that.

Liza Grant:

It couldn’t really see the sun etchings very well, kind of like I didn’t notice the sun etchings very well. So, yeah, it can find things, and then the context sometimes is there and sometimes it’s not.

Kristen Witucki:

Well, we can ask Sydney where she found this and maybe link to the post.

Liza Grant:

Or, maybe this is from her personal collection.

Kristen Witucki:

Give this website a little business.

Liza Grant:

Yes. Yes. Why not?

Kristen Witucki:

I’m going to ask it one more question just for kicks and then we can take it out later. But. What stone is above the Aries sign? Question mark.

Be My Eyes AI:

Inserted, what stone is above the Aries sign message, clear. Sent message, please, wait, be my AI is… Be my AI. The gemstone above the Aries sign on the pendant is a small purple stone, likely an amethyst.

Kristen Witucki:

[Laughter] Wait, that’s not an Aries.

Liza Grant:

It’s not Aries. But I will say the Aries, obviously, the astrological signs don’t fit within exactly one month. So the stones are sort of in, but each sign has a stone to its left and a stone to its right. Um, so I will say Aries looks to have two pretty clear stones on either side of it. There’s a purplish one, one over, not next to it. It could just be interpreting one of the more clear-looking ones as a very light purple that I’m not seeing on my screen.

Kristen Witucki:

Well, there is so, I think that I know So Aries is,

Liza Grant:

Oh, Aquamarine. I just remembered March.

Kristen Witucki:

For March, and then a diamond for April.

Liza Grant:

Okay, diamond for April. Looks like it could be a diamond, and maybe we’ve got a real light aquamarine with the facets. You got to take into account the facets when you’re working with gem alt text. Could make it seem like it’s a light purple or something.

Kristen Witucki:

This is a little bit different than your client work. I don’t think you describe…

Liza Grant:

Yeah, I work with a lot of financial tech stuff, and we don’t usually get to get into this, this amount of color.

Narrator Kristen:

AI gave a detailed description of the pendant Sydney chose. And to be fair, it did a good job. Especially with the sun symbol and the center stone. However, it got a little sidetracked by focusing wrongly on the stones over the Zodiac signs and it couldn’t quite figure out the orientation of the ornamentation. Liza focused on astrology as the primary purpose of the pendant and conveyed the important details of the piece. In general, she writes descriptions for financial documents, not for jewelry websites. But Liza knew that I’d be interested in the pendant, because we occasionally get sidetracked on artistry, and she tailored the description to her audience, me. So now, on to our monument.

Kristen Witucki:

Okay, so just for kicks, I do have Liza’s description of our final photo ready to read. But I thought I’d switch it up a little bit and start with the AI first this time. And we will see what it comes up with, and then what Liza came up with ahead of time. So I’m going to open the monument photo.

Be My Eyes AI:

Describe with Be My Eyes. Cancel. Cancel. But please wait. Message. Text Field. [six second pause] Be My AI. The image shows a bronze statue of Franklin D Roosevelt, the 32nd President of the United States, seated in a chair with a cloak draped over his shoulders. Next to him is a smaller statue of a dog, which is his Scottish Terrier named Fala. The statues are part of the Franklin Delano Roosevelt Memorial in Washington, D. C. Behind the statues, there is an inscription on the wall that reads, “They who seek to establish systems of government based on the regimentation of all human beings by a handful of individual rulers. Ellipsis, call this a new order. It is not new, and it is not order.”

Kristen Witucki:

Huh.

Liza Grant:

Wow. That’s certainly what that is.

Kristen Witucki:

It’s very factual about it, though.

Liza Grant:

It is. It wouldn’t start a revolution the way that it would if I read it, I think.

Kristen Witucki:

I mean, I didn’t know the dog either. So. You’re still dominating as the human alt-text writer.

Liza Grant:

Sure. I appreciate that. And that brings up a good point where a lot of times in your alt text, it’s not really a place for you to give a bunch of extra information that’s not already obvious in the rest of your piece, or your caption whatever you’re using. So adding that information about the president, that that is not anywhere else. It doesn’t say that in the image anywhere. So that might be a little bit of a case of over alt texting, where it’s a good image description, if you’re just, if you just want to know what the description of that image is, but if you’re writing alt text you wouldn’t want to put too much extra stuff in there, because only certain people are going to have access to that. That text you’re writing, it’s so important that you want to say it. So you do want to be concise when you can so you’re not sitting there for a full minute hearing about one image that might not take that much time to view and digest that way.

Kristen Witucki:

Let’s see Liza’s description of… the braille died again. C’mon. All right. Here we go. A statue of Franklin Delano Roosevelt seated with his dog at his side. The quote beside him reads, “They who seek to establish systems of government based on the regimentation of all human beings by a handful of individual rulers call this a new order. It is not new and it is not order.” Yeah, so I think in both cases, the descriptions focus on the quotation and maybe a little bit less on his physical description, which is fine, because we don’t know what this is being used for. For this case, it’ll just be used for a chit-chat on the podcast. So that’s good.

Liza Grant:

Yes. Exactly. So if we had more information, yeah, we would say, Oh, this is like a monument history class syllabus. So we want to make sure that the monument is a huge part of it. But if you’re having a newsletter or something and you just want to get people riled up for a cause to do something they care about, it’s good to know that FDR said it. That’s kind of what the role of the statue is, to let you know, hey, this quote is attributed to this person. But it’s more about the meaning of the quote than necessarily how he looks when he’s pictured. Yeah. Sometimes you want to know, is it bronze? What does it look like? Is he wearing a cape? If you have, like, a niche capes account, you would want to remove my alt text because I didn’t even mention the cape. So really, you can make it your own when you really think about the experience you want your user to take away from that image.

Kristen Witucki:

FDR had a very complicated relationship with disability. So, you know, it’s interesting to think about. I think I’m going to ask AI a question about.

Narrator Kristen:

When you peel back the layers of FDR, we know that FDR’s relationship to disability was complicated. He was ashamed of his disability. When I was younger, I thought of him as the first president with a disability. Even though he hid it, he went out and did it. And yeah, that rhymes. But, to tell you the truth, I was ashamed of my disability. Later, I learned that Franklin Delano Roosevelt took the Ugly Laws about people with disabilities not being allowed in public to a new, disturbing level. Opening institutions for them to live away from their homes and communities in absolutely horrible conditions. While AI didn’t go that far, it hearkened back to some disturbing ideas about disability.

Kristen Witucki:

Does FDR appear to have a disability? Question Mark.

Be My Eyes AI:

Inserted. Does FDR appear to have a disability? Message. Clear. Send message, please wait. Be My AI is writing a message. [4 second pause] Be My AI. Yes. The statue of Franklin D Roosevelt does subtly indicate his disability. He is seated in a chair, and his legs are covered by a cloak, which is a reference to his use of a wheelchair due to polio. The design of the statue is meant to honor his leadership while acknowledging his disability in a dignified manner.

Kristen Witucki:

Ewwwwww. Oh no, oh no, AI come on, that’s not a dignified manner to hide it.

Liza Grant:

Yeah. Not a dignified manner to hide it. The dignified manner is to put a big cape over it. Just imply it with the strategic use of a cape.

Narrator Kristen:

Franklin Delano Roosevelt might have approved of this description. In fact, for him it would have been a huge compromise to mention it at all. But it’s not 1933. It’s 2024. And people with disabilities want progress!

Bryan Bashin:

Absolutely. Well, you know, the disability community in general, and the community broadly has some critiques about AI. You know, it gathers its responses from a zillion places. Almost all are undocumented. One model ingested all of Reddit. Another one has all of Wikipedia and on and on and on. Zillions of bytes. But sometimes the things in general culture are not particularly disability positive or blind positive. And some of the stuff, especially stuff that’s in the public domain, is 19th-century ideas about blindness. So when you told one of those models that you’re blind, and it comes back with, Oh, I’m sorry. Realize like we’ve got some training to do, because the models are as ignorant as the person standing in front of you at the line of the DMV. So how do you train these models properly? Well, there is no substitute for the lived experiences of people with disabilities, and what we do, what we want, how we want things described, sort of what’s important to us.

Kristen Witucki:

Okay, all right, I have another question for AI besides what the hell’s wrong? Does he use a wheelchair? Question mark.

Be My Eyes AI:

Inserted. Does he use a wheelchair? Message, clear. Send message, please wait. Be My AI is writing a message. [4 second pause] Be My AI. In the statue, Franklin D Roosevelt is not explicitly shown using a wheelchair. He is seated in a chair with a cloak draped over his legs, which subtly references his disability without directly depicting the wheelchair. This design choice reflects the way Roosevelt often presented himself in public, as he typically did not want to be seen in a wheelchair.

Narrator Kristen:

We found two problems with AI in this instance, which you may or may not be able to reproduce if you get excited and want to try this out. Because as I said, AI is changing and learning, if you will, every second. The first was the outdated trope of disability. The second was that we realized it picked up knowledge it had about the memorial that was not technically captured in the photo. When we interviewed Bryan, he was very careful to talk about the importance of our society developing good AI literacy, similar to our current challenges of developing good media literacy.

Bryan Bashin:

Well, you know, as a society, and this is not just blind people. But we are going to need to develop AI literacy. What does that mean? That means you just can’t press the button and accept the result. You have to be an active player in engaging with AI. You have to know the areas in which it’s likely to fail. You need to know, for instance, that it answers you based on a pastiche of God knows what. It’s absorbed billions and billions of data points. And sometimes you have to second guess, double guess, be skeptical. All these things, because the same photograph on a different day may give you different things, as the AI learns literally, day by day and hour by hour. It really is something that is a living, changing… well, maybe living is controversial, but a changing entity right now. So, for instance, I used one LLM, not Be My Eyes, by the way, a different LLM, and I had it take a look and tell me what was this little tin I had on my kitchen counter, and it was a Twinings tin, and it read it beautifully. And then I thought something was a little off, and I said, what’s on the back of the box, and it read me the back of the box, but I hadn’t turned the box around, so it was not reading that box. It was reading some image of a Twinings box that pretty much matched what I had. And was reading that. And so that’s a little creepy. It’s not giving you the actual OCR there. So there’s the rub, you have to tell it to read the actual text, or you have to turn the thing around, take another picture and have it read that. So because, right now, so many of the models are pretty much image matching, rather than direct OCR and examination, you have to get into its brain and second guess it and double check it. At Be My Eyes, we’re developing something called human in the loop. This is going to allow folks who take an AI picture to later on just quickly submit it to a group of those random volunteers who can vote up, yes or no, that’s an accurate description or not. We think that’s the antidote because it’s going to be years before AI is fail-safe. And, whereas choosing like the box of tea might have little bad consequence, what if somebody decides they’re going to use this on like, is this the pill bottle they think they’re taking for their medicine or whatever? We advise people not to depend on this right now for exactly this reason, but we also know that people will do it, and so AI literacy is something that we’re going to have to develop as a society.

Kristen Witucki:

Now, let’s check out our experience with AI looking outside of the borders of the photo.

Be My Eyes AI:

Inserted, does the chair have wheels on it? Message clear. Send message. Sent. Please wait. Be My AI is writing a message. [2 second pause]. Be My AI. Yes, the chair in the statue does have wheels on it, indicating that it is a wheelchair. This detail subtly acknowledges Franklin D. Roosevelt’s use of the wheelchair due to polio.

Liza Grant:

I will say you cannot see a wheel.

Kristen Witucki:

Okay.

Liza Grant:

Not a visible wheel.

Kristen Witucki:

How does AI detect wheels? Question Mark

Be My Eyes AI:

Inserted. How does AI detect wheels? Be My AI is writing a message. Be My AI. AI detects objects like wheels through a process called image recognition, which involves several steps. One, star, star, data collection, star, star. AI systems are trained on large data sets containing images labeled with the objects they contain, such as wheels. Two, star, star, feature extraction, star, star. The AI uses algorithms to identify and extract features from the images, such as edges, shapes, and textures that are characteristic of wheels. Three, star, star, model training, star, star. Using machine learning techniques, the AI learns to recognize patterns and features associated with wheels by analyzing the labeled images. Four, star, star, object detection, star, star. Once trained, the AI can analyze new images, applying what it has learned to detect and identify wheels based on the features it has been trained to recognize. Five, star, star, validation and refinement, star, star. The AI’s accuracy is validated against a separate set of images, and the model is refined to improve its detection capabilities. This process allows AI to identify objects like wheels in images with a high degree of accuracy.

Kristen Witucki:

Okay, it says there are wheels, and it gave us a long description of all of the different ways that it figures this stuff out. But

Liza Grant:

I don’t believe it sees the wheel in that. I think if we ask if it’s in the photo, it will say no, I see a cape.

Kristen Witucki:

I think maybe the photo doesn’t actually show the wheels, and then AI deduces it from recognizing the statue, you know. So that’s, that’s how I think that ended up happening.

Liza Grant:

Or it knows that he was a wheelchair user. And it could just be implying, like, fitting that in. It’s like, yeah, he’s in a chair. Obviously, it’s the wheelchair. It’s the one he hides with this cape.

Kristen Witucki:

Uh huh. Uh huh. So I had recommended the Roosevelt Memorial to Sydney as a possibility, and I’m glad she went with it, because this is such a complex statue in terms of disability history. But also, the reason I recommended it was that I’ve been there before. And I do remember that the statue I felt 25 years ago, when I was there, was in a chair, and the chair had legs down to the ground. It kind of looked like, or felt like, a chair chair, not a wheelchair, but if you reached all the way down to the bottom, you could feel the wheels on the legs underneath, like hidden, unless you were like on the ground feeling it. So that’s where I think maybe the photographer did not capture the wheels, so you couldn’t see them. But AI knew that this was the statue with those little, tiny, dinky, hidden wheels, you know.

Liza Grant:

So it had, it had a little bit of knowledge outside? Well, probably a lot of knowledge outside that would not be apparent if someone were just looking at this photo.

Kristen Witucki:

Yeah. That’s what I think, huh? But I’m just really annoyed that it’s like, “this is showing disability in a dignified way.” Like that has to be changed.

Liza Grant:

Yeah. That is extremely problematic.

Kristen Witucki:

It got a little better toward the end when he kind of said, like, Roosevelt was, you know, did not want his disability to be apparent like, that’s, that’s more okay.

Liza Grant:

So it’s how he presented himself, right? Yeah, that’s presenting people accurately. Yeah, we can’t. We can’t say anything badly about that. Just some of the descriptions probably wouldn’t be what a human living in this decade would use.

Narrator Kristen:

As a photo describer who constantly generates alt text, Liza has to keep her description within the boundaries of each photo. That’s incredibly important for maintaining accuracy and for not letting alt text take over a user’s experience of a website or a document as a whole. As Bryan says,

Bryan Bashin:

One thing is, in Be My Eyes’ Be My AI, you can take multiple photos. Sometimes it helps to take photos of the thing at different angles. And then you can ask about the series of images together. What is in the center of this image? And then you can, you can follow up with words like, really, is that really a cat on the windowsill, or is it a statue of a cat? I’ve seen it get confused about that. So are you sure that this is whatever? Or how do you know that that person is blind? You know, those questions often yield very insightful results about its own thinking process. So when we were talking about AI literacy before, not being embarrassed to ask skeptical questions repeatedly, like you’re talking to a four-year-old, like, where did you get that ice cream? This will give you insight. And oftentimes, what we’re seeing now, at least with the model that currently powers Be My AI, which is OpenAI’s model, it’ll say, Oh, I’m sorry. I didn’t realize that that was the case. Here’s what I’m seeing. So have that conversation.

Narrator Kristen:

If there’s one thing you take away from this episode, do not ever be afraid to bring your skepticism and your prior knowledge to all interactions with AI. And to use your human editorial skills! Bryan also gave us a sneak preview into what our future reckoning with AI might be like.

Bryan Bashin:

Speaking about conversations, I should say now that when you use Be My AI today, you’re using the snap a photograph, get a description model. But as we’ve been demonstrating this summer, Be My Eyes 4.0, or actually, it’s ChatGPT’s 4.0, is going to usher in the realm of conversational AI. So it’s not just like you’re sending a message to Mars and waiting for a response. It is interruptible with, like, almost no latency, so you could just stop it mid-sentence by talking and saying, “no, actually, I wanted to know about this,” and it’ll stop and rethink. And that experience is breathtaking, you know? I think that once you use 4.0, everything else seems like so 2023, you know. It is exactly the way AI is going to be in the future for everybody. Not these send a message to the moon, but a conversation back and forth, interrupted, second guess, third guess, just like you and I would do. And so in the course of a five-second interchange, you get the information you want.

Kristen Witucki:

So if I yell at AI for inaccuracy, does that impact the conversation in the new version?

Bryan Bashin:

In the new version, you can do a number of things. You can, you could tell it, uh, speak slower, speak quicker. Tell me more or describe it less, because sometimes these things are verbose, and these are now experimental models, but you’ll find some of the models are so conversational, it’s like, oh, that’s a black cat on the window sill. Do you like cats? I like cats. Well, do you have any cats? Well, that’s a little too chatty. You can tell it to shut up and just get to the point. And it will say, “Oh, I’m sorry, yeah, I’ll do that.” And it does that. Currently with 4.0, the parameters that you introduce to it can be much bigger than what I just described. You could say, like, let’s talk in Spanish about this, and it’ll shift to Spanish, or let’s translate this to this, and it’ll do that. It works great within a session, but you hang up and you have a new session, and it’s, it’s like total amnesia. That’s because we’re not training the models within these sessions currently, so that every session is a new session.

Kristen Witucki:

Amazing. Well, that new version is mind-blowing. I can’t wait to try it and check it out and talk to it and then freak out about the fact that I’m talking to it and talk to it again. That’s amazing.

Bryan Bashin:

It is amazing and it’s incredibly addictive.

Narrator Kristen:

And then, with ultimate generosity and probably a bit of excitement about acting it out for us, Bryan improvised a scenario with the new version of Be My AI.

Bryan Bashin:

Open ChatGPT.

Be My Eyes AI (4.0):

Fast Audio

Bryan Bashin:

And I’m going to start the voice version.

Be My Eyes AI (4.0):

Fast Audio

Bryan Bashin:

And now I’m going to turn on its video.

Be My Eyes AI (4.0):

Fast Audio

Bryan Bashin:

I’m opening the video camera.

Be My Eyes AI (4.0):

And what are we looking at today?

Bryan Bashin:

Well, I want to put the camera on the rearview. Let’s see if I can do that.

Be My Eyes AI (4.0):

Fast Audio

Bryan Bashin:

Okay, I think it’s on.

Be My Eyes AI (4.0):

Looks like it. What do you want to show me with the rear camera?

Bryan Bashin:

Well, I wonder if you can see the screen that I’m using right now.

Be My Eyes AI (4.0):

I can see it. You’re on a video call, right? It’s a little blurry, but I can make out that you’re talking to someone.

Bryan Bashin:

Yeah, let me make this better for you. Computer turn video lights off. Now, can you see…?

Be My Eyes AI (4.0):

Much better. I can see the screen more clearly now.

Bryan Bashin:

Tell me about the people on the screen.

Be My Eyes AI (4.0):

Yes. I can see the screen more clearly now.

From what I can see, it looks like a video call with multiple people. There’s a person in the main window speaking and a few others in smaller windows. It seems like a group discussion or meeting. What’s the occasion?

Bryan Bashin:

This is a live Zoom recording. Tell me about the woman in the picture.

Be My Eyes AI (4.0):

It looks like the woman in the picture is participating in a Zoom call. She’s in a well-lit room, wearing a red top and has light colored hair. It’s hard to tell more without more context. Is she a colleague or a friend of yours?

Bryan Bashin:

Both actually.

Narrator Kristen:

Bryan asked about the woman in the picture of the video. He was talking about me. He was giving a nod to our past connection and our present relationship. However, the camera-shy person that I am, I didn’t have my video on then. So the AI actually picked up our producer Markus, our executive producer, who was wearing the red shirt it described. So, good for AI for not making gender assumptions, but it happened to miss the mark there.

Bryan Bashin:

I want to describe how we can interrupt, and I’m going to pan my camera over to the right in my home office. What can you tell me about the office?

Be My Eyes AI (4.0):

Your home office looks cozy and well-organized. There’s a nice, large rug on the floor, which adds a warm touch to the room. I see a sliding glass door that brings in a lot of natural light and offers a view of some greenery outside, making it a pleasant space to work in. There’s also a comfortable-looking office chair and a desk with a computer setup. It seems like a great spot for productivity and focus.

Bryan Bashin:

Yeah, it is. But you didn’t mention a dog. Is there a dog in the image?

Be My Eyes AI (4.0):

You’re right. I missed it. There’s a dog lying down on the rug. The dog looks quite comfortable and relaxed. It’s always nice to have a furry friend keeping you company while you work. Is your dog a frequent office mate?

Bryan Bashin:

Oh yeah. He insists on being here. Can you tell what breed he is?

Be My Eyes AI (4.0):

It’s a bit hard to tell the breed from this angle and lighting, but your dog looks like a larger breed with a solid build, possibly a Labrador Retriever or a similar mix.

Bryan Bashin:

You got it right. He’s a black lab, and his name is Ferdinand.

Be My Eyes AI (4.0):

Ferdinand is a great name for a black lab. He looks like a wonderful companion. It’s nice to have him around while you’re working, isn’t it?

Narrator Kristen:

The gushing from a machine, the long, long uncanny valley, felt a little bit creepy. OK really creepy. Were we in Star Wars now?

Bryan Bashin:

Yes, it’s nice to have you around too. We were just discussing the Franklin Delano Roosevelt Memorial in Washington, DC. Can you tell me what you know about the Braille on that monument?

Be My Eyes AI (4.0):

The Franklin Delano Roosevelt Memorial in Washington, DC, is notable for its use of Braille. There’s a particular section where one of Roosevelt’s quotes is inscribed in Braille. This was done to honor Roosevelt’s efforts to support people with disabilities, including those with visual impairments. The inclusion of Braille in such a prominent location underscores the importance of accessibility and the recognition of all individuals in society. It’s a powerful symbol of inclusion. Have you visited the memorial?

Bryan Bashin:

No, but I seem to remember, there was a controversy about Braille when it opened, like there was Braille that people couldn’t touch. Can you tell me something about that?

Be My Eyes AI (4.0):

Yes, you’re right about the controversy. When the Franklin Delano Roosevelt Memorial first opened, there was Braille on the monument that was placed too high for most people to reach and feel comfortably. This sparked criticism as it defeated the purpose of having Braille inscriptions, which are meant to be accessible to people with visual impairments. The intention was to honor FDR’s support for people with disabilities, but the execution fell short initially. This led to changes to make the Braille more accessible. It’s an interesting example of how important it is to consider accessibility in public spaces. And how even well-intentioned efforts can sometimes miss the mark.

Bryan Bashin:

Okay, well, thank you for that. Could you be quiet for a second?

Be My Eyes AI (4.0):

Take your time. I’ll be here when you’re ready.

Bryan Bashin:

Okay, you saw a little bit of the future.

Kristen Witucki:

Wow, wild, wild.

Narrator Kristen:

When Bryan brought up a negative topic, the computer shifted into … possibly anxious? Emotional expressiveness. And I thought again about describing my children’s photos, about keeping them with humans I know and trust for a bit longer, even though Be My AI does not share any photo descriptions with the outside world. When it comes to emotions, for now, I personally want them to stay human. But I thought, too, about the enormous potential an emotional computer could have for some people. For example, maybe, for people who need a lot of deliberate sequential knowledge about human emotion, people who might have real difficulty with social-emotional understanding. Having conversational AI to practice on, along with humans, could ultimately help them to participate more fully in the world. Like anything else, AI has enormous power and enormous potential for pitfalls. It’s up to us, all of us, to make it work. I asked Bryan why sighted people might want to volunteer for Be My Eyes.

Bryan Bashin:

What I found, you know, I spent a long time in nonprofit philanthropies, and what I found is that almost every human wants to do good in this world, but there’s perplexity about how do you do it? How do you find somebody who needs you? How do you avoid being too helpful? How do you leave gracefully? All these things. And what Be My Eyes does, in terms of these micro-volunteering things, is it poses you a practical question. What is this oven temperature set to, you know? How many minutes left before I have to change from a washer to the dryer? All kinds of practical things that you can answer in 30 seconds and you’re done. You feel like you’ve done some good in the world. And with that we would have more options in our lives to do that as well. I will say that we are exploring ways in which blind people ourselves can be the volunteers too. Both in terms of the specialized help, we know a lot about access technology, about other non-visual ways of doing things, and in the future, we’ll be sort of specializing our volunteers into different categories. And some of those categories will be things that blind people will be very good at, like you’re having problems with JAWS. Well, ask a blind volunteer to help you out. Or you want to get together an outfit for a business interview. Ask a sighted person who has specified that he’s really good with fashion. Select from that pool as well. As we move along, and the seven and a half million volunteers we have now, we think is the largest volunteer community on the planet at this point.

Kristen Witucki:

Well, that’s terrific. And in an era of microaggressions, and those are very real problems. I love that, that the antidote to that is micro kindnesses and micro pick-me-ups. That’s really cool. And I’m so excited to hear that you’re exploring blind people being very capable volunteers and helpers for each other and for the community. So that’s really terrific. I can’t wait for that to come out.

Bryan Bashin:

You know, in the end, we don’t want to be othered. We want to be included. And you know, some organizations have had in the past like meet the blind month and other ways to meet blind people. Well, what this is, is like a really, very, very live way that people, millions of people, will meet blind people, have brief conversations and sort of normalize the experience.

Narrator Kristen:

Thank you, Liza Grant, for playing Human versus AI. Showing how they can at times connect, and highlighting the wonderful, human-centered work Tamman does every day. Thanks so much to Bryan Bashin, who gave us generous time and forthright, nuanced insight about the rapidly evolving world of AI. Thanks to Sydney Bromfield, who picked out the photos for our game. And thanks to Markus Goldman, our executive producer, who laid it all out, listening again and again and again and again. I am your host and your narrator writer, Kristen Witucki.

If you like what you heard today and want to explore more about digital accessibility, technology, our company culture or anything else, schedule a time to meet with us. You can find the whole Tamman team at tammaninc.com. That’s t-a-m-m-a-n-i-n-c.com. Don’t forget to sign up for our newsletter so you never miss an event or an insight from us. Be sure to rate our podcast five stars on Spotify, Apple Podcasts, or wherever you listen to us. It really helps our podcast grow and reach new audiences. And make sure to follow us, hit the bell icon so you never miss an episode. If social media is more your style, you can also follow us at Tamman, Inc., on LinkedIn, Twitter, X, Instagram, or Facebook, and share our podcast on your favorite platform. Until next time, thanks so much for listening and being part of Article 19. Take care.

Show Notes

- Be My Eyes: A free app using live video to connect blind and low-vision users who want sighted assistance

- Necklace Pendant Image: Photo of the discussed astrology necklace

- FDR Monument Image: Photo of the discussed FDR Monument

- All About Alt Text: An Article 19 episode welcoming Tamman accessibility champions to discuss all things alt text

- Spotlight: Be My Eyes: This episode of Article 19 highlights what Be My Eyes is, how it works, and the impact of the organization